Facebook and Google are trying to create artificial intelligence that mimics the human brain. First, they need to figure out how our own minds work.

Machines contain the breadth of human knowledge, yet they have the common sense of a newborn. The problem is that computers don't act enough like toddlers. Yann LeCun, director of artificial intelligence research at Facebook, demonstrates this by standing a pen on the table and then holding his phone in front of it. He performs a sleight of hand, and when he picks the phone up—ta-da! The pen is gone. It’s a trick that’ll elicit a gasp from any one-year-old child, but today's cutting-edge artificial intelligence software—and most months-old babies—can’t appreciate that the disappearing act isn’t normal. “Before they’re a few months old, you play this trick on them, and they don’t care,” says LeCun, a 54-year-old father of three. “After a few months, they figure out this is not normal.”

One reason to love computers is that, unlike many kids, they do as they’re told. Just about everything a computer is capable of was put there by a person, and computers have rarely been able to discover new techniques or learn on their own. Instead, they rely on scenarios created by software programmers: If this happens, then do that. Unless it's explicitly told that pens aren't supposed to disappear into thin air, a computer just goes with it. The big piece missing in the crusade for the thinking machine is to give computers a memory that works like the grey gunk in our own heads. An AI with something resembling brain memory would be able to discern the highlights of what it sees, and use the information to shape its understanding of things over time. To do that, the world's top researchers are rethinking how machines store information, and they're turning to neuroscience for inspiration.

This change in thinking has spurred an AI arms race among technology companies such as Facebook, Google, and China’s Baidu. They’re spending billions of dollars to create machines that may one day possess common sense and to help create software that responds more naturally to users’ requests and requires less hand-holding. A facsimile of biological memory, the theory goes, should let AI not only spot patterns in the world, but reason about them with the logic we associate with young children. They’re doing this by pairing brain-aping bits of software, known as neural networks, with the ability to store longer sequences of information, inspired by the long-term memory component of our brain called the hippocampus. This combination allows for an implicit understanding of the world to get “fried in” to the patterns computers detect from moment to moment, says Jason Weston, an AI researcher at Facebook. On June 9, Facebook plans to publish a research paper detailing a system that can chew through several million pieces of data, remember the key points, and answer complicated questions about them. A system like this might let a person one day ask Facebook to find photos of themselves wearing pink at a friend's birthday party, or ask broader, fuzzier questions, like whether they seemed happier than usual last year, or appeared to spend more time with friends.

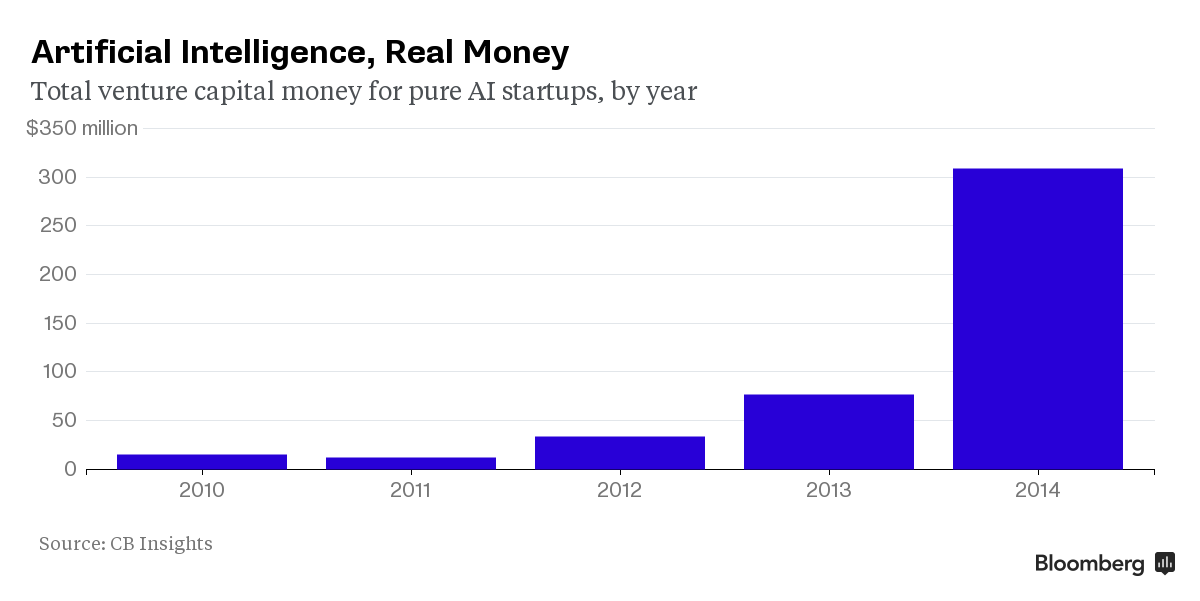

While AI has long been an area of interest for Hollywood and novelists, companies hadn't paid much attention to it until about five years ago. That's when research institutions and academics, aided by new techniques for crunching reams of data, started breaking records in speech recognition and image analysis at an unexpected rate. Venture capitalists took notice and invested $309.2 million in AI startups last year, a twentyfold increase from 2010, according to research firm CB Insights. Some of these startups are helping to break new ground. One in Silicon Valley, called MetaMind, has developed improvements to computers' understanding of everyday speech. Clarifai, an AI startup in New York, is doing complex video analysis and selling the service to businesses.

Corporate research labs now rival those in academia in terms of staffing and funding. They have surpassed them in access to proprietary data and computing power to run experiments on. That's attracting some of the field's most prominent researchers. LeCun, former director of New York University's Center for Data Science, joined Facebook in December 2013 to run its AI group. While still teaching a day a week at NYU, he has hired nearly 50 researchers; on June 2, Facebook said it is opening an AI lab in Paris, its third such facility. Google says its own AI team numbers in the “hundreds,” declining to be more specific. Baidu's Silicon Valley AI lab opened in May 2014, and now has around 25 researchers led by Andrew Ng, a former AI head at Google. The Chinese search giant employs about 200 AI specialists globally. The interest from deep-pocketed consumer Internet companies kickstarted a research boom creating “one of the biggest advances” in decades, says Bruno Olshausen, head of the Redwood Center for Theoretical Neuroscience at the University of California-Berkeley. “The work going on in these labs is unprecedented in the novelty of the research, the pioneering aspect.”

As far as tech money has pushed AI in recent years, computers are still pretty dumb. When talking to friends in a loud bar, you pick up what they're saying, based on context and what you remember about their interests, even if you can't hear every word. Computers can't do that. “Memory is central to cognition,” says Olshausen. The human brain doesn't store a complete log of each day's events; it compiles a summation and bubbles up the highlights when relevant, he says. Or at least, that's what scientists think. The problem with trying to create AI in our own image is that we don't fully comprehend how our minds work. “From a neuroscience perspective, where we are in terms of our understanding of the brain—and what it takes to build an intelligent system—is kind of pre-Newton,” Olshausen says. “If you're in physics and pre-Newtonian, you're not even close to building a rocket ship.”

Modern AI systems analyze images, transcribe texts, and translate languages using systems called neural networks, inspired by the brain's neocortex. Over the past year, virtually the entire AI community has begun shifting to a new approach to solve tough-to-crack problems: adding a memory component to the neuron jumble. Each company uses a different technique to accomplish this, but they share the same emphasis on memory. The speed of this change has taken some experts by surprise. “Just a few months ago, we thought we were the only people doing something a bit like that,” says Weston, who co-authored Facebook's first major journal article about memory-based AI last fall. Days later, a similar paper appeared from researchers at Google DeepMind.

Since then, AI equipped with a sort of short-term memory has helped Google set records in video and image analysis, as well as create a machine that can figure out how to play video games without instructions. (They share more in common with kids than you probably thought.) Baidu has also made significant strides in image and speech recognition, including answering questions about images, such as, “What is in the center of the hand?” IBM says its Watson system can interpret conversations with an impressive 8 percent error rate. With Facebook's ongoing work on memory-based AI software that can read articles and then intelligently answer questions about their content, the social networking giant aims to create “a computer that can talk to you,” says Weston.

The next step is to create an accompanying framework more akin to long-term memory, which could lead to machines capable of reasoning, he explains.

If a talking, learning, thinking machine sounds a little terrifying to you, you're not alone. “With artificial intelligence, we're summoning the demon,” Elon Musk said last year. The chief executive officer of Tesla Motors has a team working on AI that will let its electric cars drive themselves. Musk is also an investor in an AI startup named Vicarious. After some apparent self-reflection, Musk donated $10 million to the Future of Life Institute, an organization set up by Massachusetts Institute of Technology Professor Max Tegmark and his wife Meia to spur discussions about the possibilities and risks associated with AI. The organization brings together the world's top academics, researchers, and experts in economics, law, ethics, and AI to discuss how to develop brainy computers that will give us a future bearing more resemblance to The Jetsons than to Terminator. “Little serious research has been devoted to the issues, outside of a few small nonprofit institutes. Fortunately, this is now changing,” Stephen Hawking, who serves on the institute's advisory board, said at a Google event in May. “The healthy culture of risk assessment and the awareness of societal implications is beginning to take root in the AI community.”

AI teams from competing companies are working together to advance research, with an eye toward doing so in a responsible way. The field still operates with an academic fervor reminiscent of the early days of the semiconductor industry: sharing ideas, collaborating on experiments, and publishing peer-reviewed papers. Google and Facebook are developing parallel research schemes focused on memory-based AI, and they're publishing their papers to free academic repositories. Google's cutting-edge Neural Turing Machine “can learn really complicated programs” without direction and can operate them pretty well, Peter Norvig, a director of research at Google, said in a talk in March. Like a cubicle dweller wrestling with Excel, the machine makes occasional mistakes. And that's all right, says Norvig. “It's like a dog that walks on its rear legs. Can you do it at all? That's the exciting thing.”

Technological progress within corporate AI labs has begun to make its way back to universities. Students at Stanford University and other schools have built versions of Google's AI systems and published the source code online for anyone to use or modify. Facebook is fielding similar interest from academics. Weston delivered a lecture at Stanford on May 11 to more than 70 attending students—with many more tuning in online—who were interested in learning more about Facebook's Memory Networks project. LeCun, the Facebook AI boss, says, “We see ourselves as not having a monopoly on good ideas.” LeCun co-wrote a paper in the science journal Nature on May 28, along with Google's Geoff Hinton and University of Montreal Professor Yoshua Bengio, saying memory systems are key to giving computers the ability to reason about the world by adjusting their understanding as they see things change over time.

To illustrate how people use memory to respond to an event, LeCun grabs his magic pen from earlier and tosses it at a colleague. A machine without a memory or an understanding of time doesn't know how to predict where an object will land or how to respond to it. People, on the other hand, use memory and common sense to almost instinctively catch or get out of the way of what's coming at them. Weston, the Facebook AI researcher, watches the pen arc through the air and then hit him in the arm. “He's terrible at catching stuff,” LeCun says, with a laugh, “but he can predict!” Weston reassures him: “I knew it was going to hit me.”
